Trireme Platform Documentation

1. Introduction

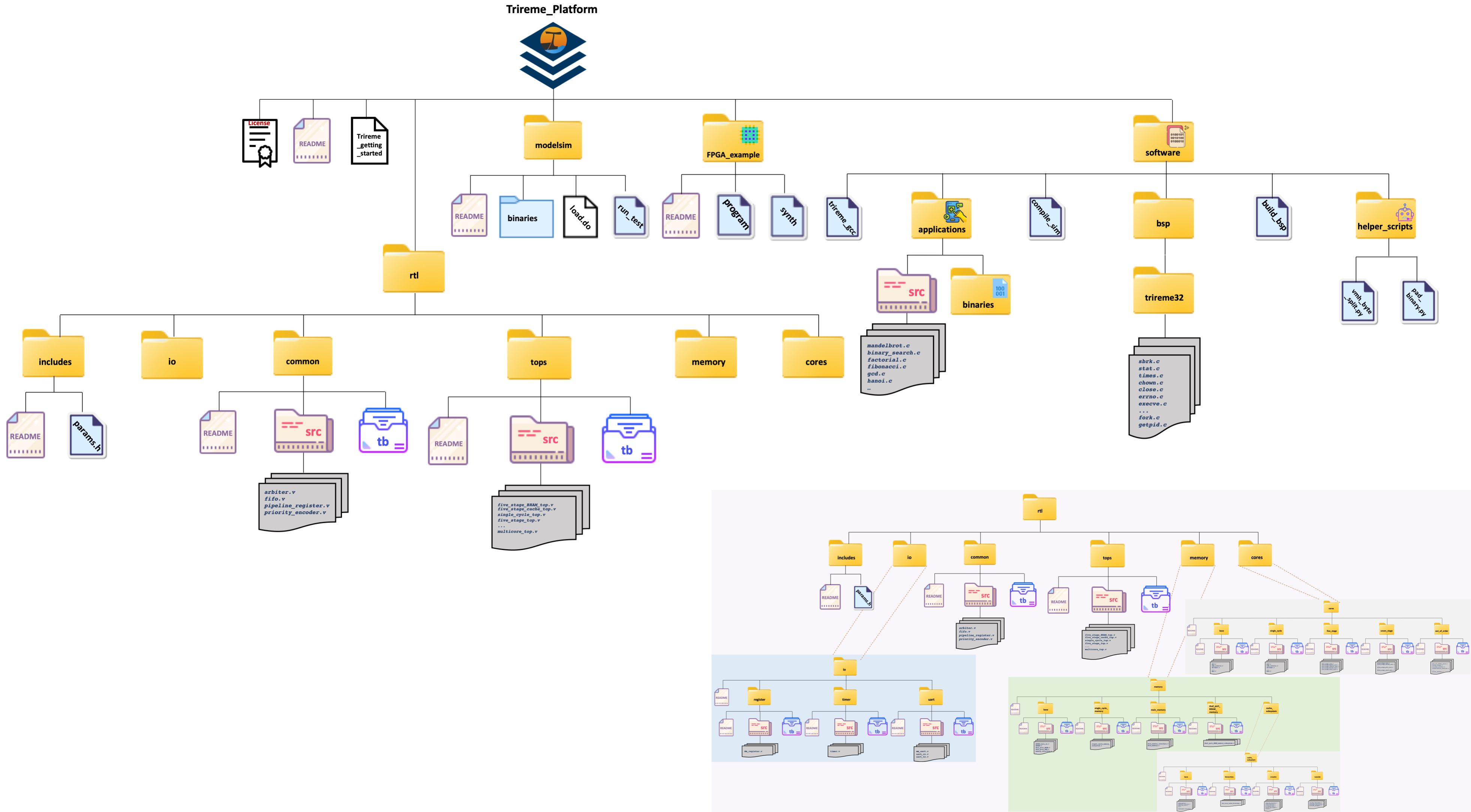

The Trireme Platform contains all the tools necessary for register-transfer level (RTL) architecture design space exploration. The platform includes RTL, example software, a toolchain wrapper, and a configuration graphical user interface (GUI). The platform is designed with a high degree of modularity. It provides highly parameterized, composable RTL modules for fast and accurate exploration of RISC-V-based cores of varying complexity and of multi-level cache and memory organizations. The platform can be used for both RTL simulation and FPGA-based emulation. The hardware modules are implemented in synthesizable Verilog without vendor-specific blocks. The platform's RISC-V compiler toolchain wrapper is used to develop software for the cores, and a web-based system configuration GUI can be used to rapidly generate different processor configurations. The interfaces between hardware components are carefully designed so that processor subsystems, such as the cache hierarchy, the cores, or individual pipeline stages, can be modified or replaced without impacting the rest of the system. As a result, users can quickly instantiate complete, working RISC-V multi-core systems in synthesizable RTL and make targeted modifications to fit their needs.

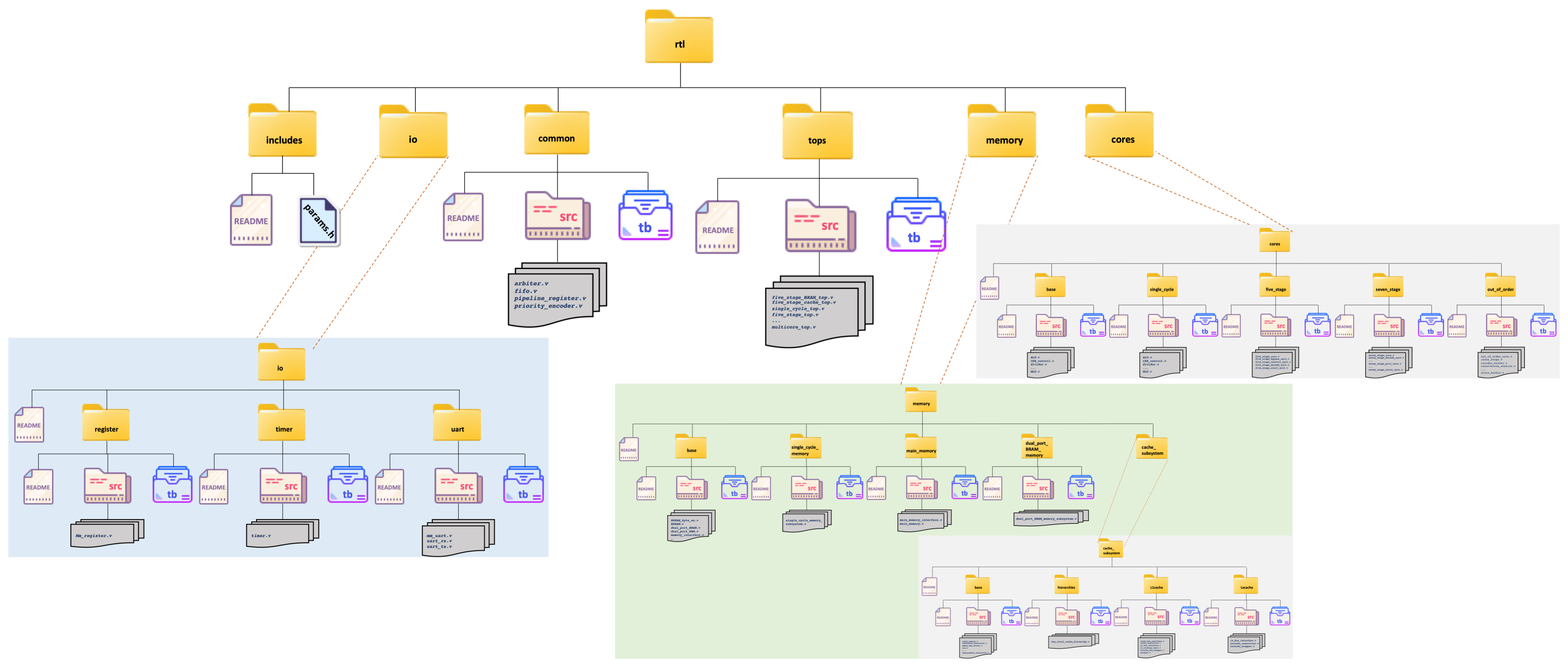

2. Trireme Platform Folder Structure

The complete Trireme Platform code base can be accessed through its GitHub repository. The code base directory structure is shown in the figure below. A concerted effort was made to include a README in each subfolder to make the hardware modules and the supporting software ecosystem easier to understand and use.

3. Quick Start

The single-cycle core with FPGA BRAM memory is a good demonstration system for getting acquainted with the Trireme Platform. The single_cycle_BRAM_top module is in the rtl/tops/src directory, and test benches for the whole processor system are in the rtl/tops/tb directory. There are several test benches named tb_single_cycle_BRAM_top_PROGRAM, where PROGRAM is the name of the test program. Each test bench simulates the execution of a different program.

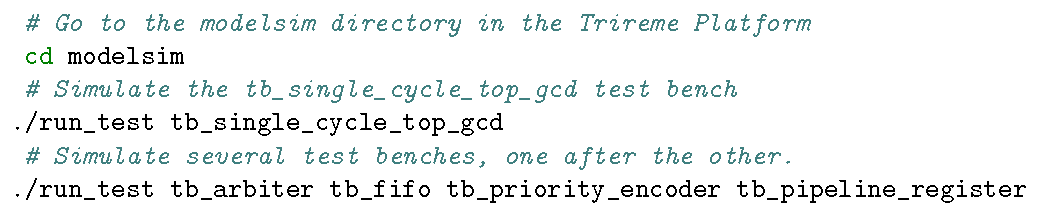

The Trireme Platform includes scripts to run simulations in Modelsim from the command line. To use the scripts in the modelsim directory, install the Modelsim Altera Starter Edition.

The scripts should work with any version of the Altera Starter Edition of Modelsim. We have tested them with version 10.3d, released with Quartus II 15.0. To run an example test bench, navigate to the modelsim directory and run:

./run_test tb_single_cycle_BRAM_top_gcd

If your environment is set up correctly, you should see output similar to the figure below.

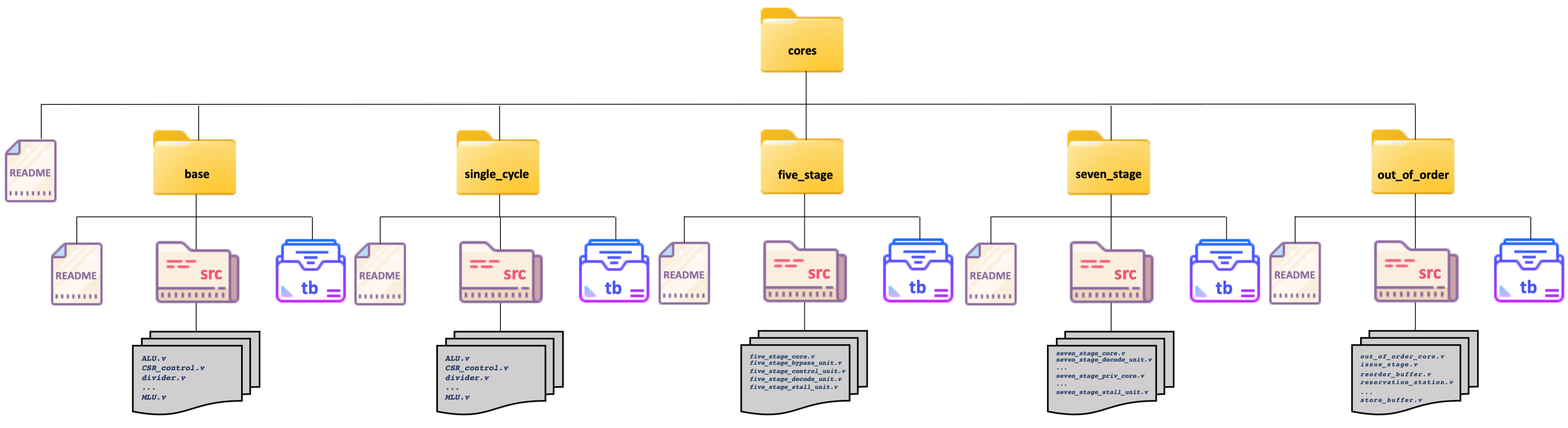
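The gcd test benches simulate a greatest-common-divisor program. The program source shipped in the repository is not reproduced here. The C function below is a hypothetical sketch of such a kernel (the name gcd_sub is illustrative). It uses only subtraction and comparison, so it maps onto base RV32I instructions without the divide and remainder instructions of the M extension.

```c
#include <stdint.h>

/* Hypothetical GCD kernel: Euclid's algorithm using only subtraction and
   comparison, so no divide or remainder instruction is required (base
   RV32I has none). */
uint32_t gcd_sub(uint32_t a, uint32_t b)
{
    /* gcd(x, 0) == x; also avoids an endless loop when one input is 0. */
    if (a == 0) return b;
    if (b == 0) return a;
    while (a != b) {
        if (a > b)
            a -= b;   /* subtract the smaller value from the larger one */
        else
            b -= a;
    }
    return a;
}
```

For example, gcd_sub(48, 18) returns 6.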

4. Core Description

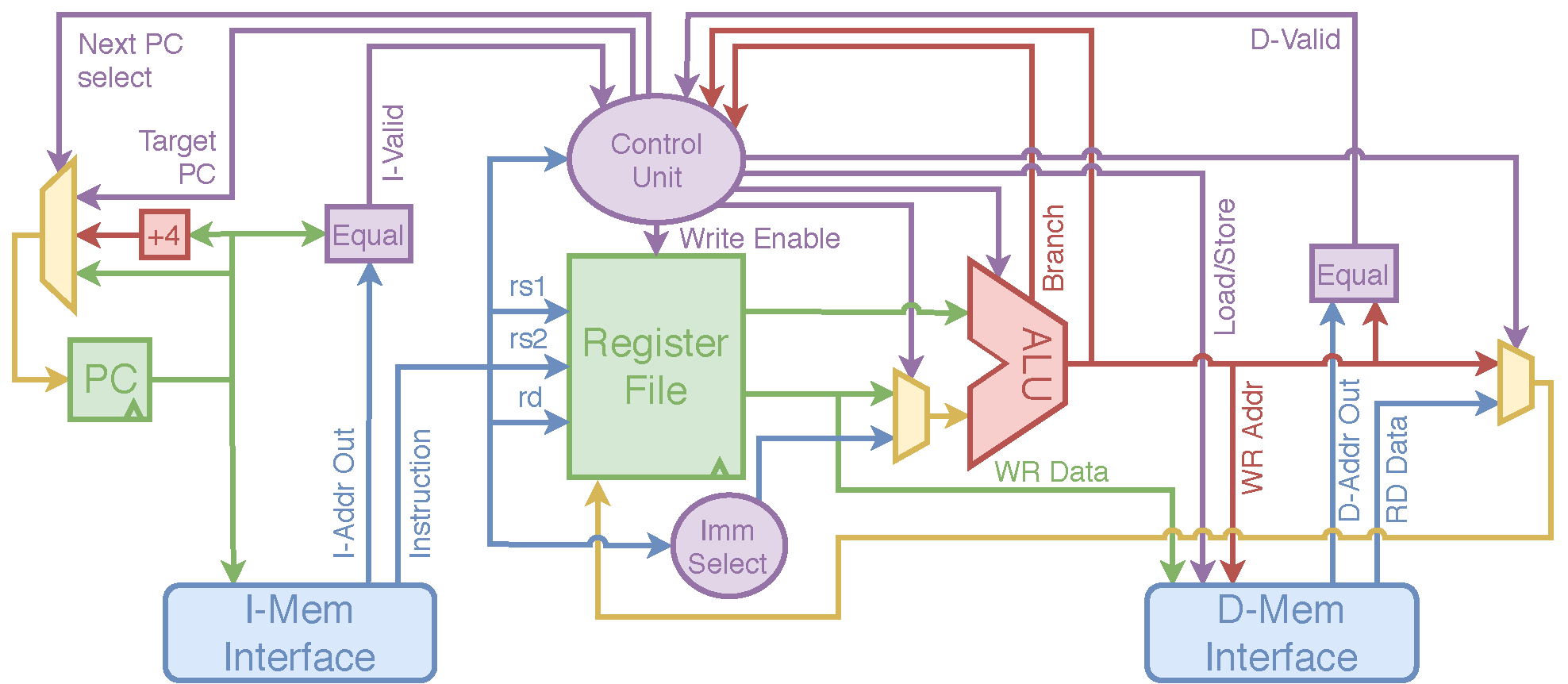

4.1 Single Cycle

The single-cycle core combines the base fetch issue, fetch receive, decode, execute, memory issue, memory receive, and writeback modules into a simple in-order RV32I core. A dedicated control module generates the control signals for each submodule in the core.
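The sketch below illustrates the kind of work a control module does. It is a small C model, not the Verilog RTL, that derives a few control signals from the RV32I major opcode in instruction bits [6:0]. The opcode values come from the RISC-V base ISA. The control_t structure and its signal names are hypothetical and do not describe the ports of the actual Trireme control module.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical control bundle; signal names are illustrative only. */
typedef struct {
    bool reg_write;  /* write a result to rd */
    bool mem_read;   /* load from data memory */
    bool mem_write;  /* store to data memory */
    bool branch;     /* conditional branch */
    bool jump;       /* JAL or JALR */
    bool alu_imm;    /* second ALU operand comes from the immediate */
} control_t;

/* Derive control signals from the 7-bit major opcode (bits [6:0]). */
control_t decode_control(uint32_t instr)
{
    control_t c = {0};
    switch (instr & 0x7Fu) {
    case 0x37: /* LUI */
    case 0x17: /* AUIPC */
        c.reg_write = true; c.alu_imm = true; break;
    case 0x6F: /* JAL */
        c.reg_write = true; c.jump = true; break;
    case 0x67: /* JALR */
        c.reg_write = true; c.jump = true; c.alu_imm = true; break;
    case 0x63: /* BRANCH */
        c.branch = true; break;
    case 0x03: /* LOAD */
        c.reg_write = true; c.mem_read = true; c.alu_imm = true; break;
    case 0x23: /* STORE */
        c.mem_write = true; c.alu_imm = true; break;
    case 0x13: /* OP-IMM */
        c.reg_write = true; c.alu_imm = true; break;
    case 0x33: /* OP (register-register) */
        c.reg_write = true; break;
    default:   /* FENCE, SYSTEM and unknown opcodes: all signals stay 0 here */
        break;
    }
    return c;
}
```

For example, the load lw x1, 0(x2) (encoding 0x00012083) asserts reg_write, mem_read, and alu_imm. The instruction add x3, x1, x2 (0x002081B3) asserts only reg_write.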

The single_cycle_top, single_cycle_BRAM_top, and single_cycle_cache_top modules use the single-cycle core with asynchronous SRAM memory, FPGA BRAM memory, and a cache hierarchy, respectively. Several test benches, each running a different test program, are included for each top module.

To run a top-level test bench, execute the run_test script from the single cycle directory:

./run_test tb_single_cycle_BRAM_top_gcd

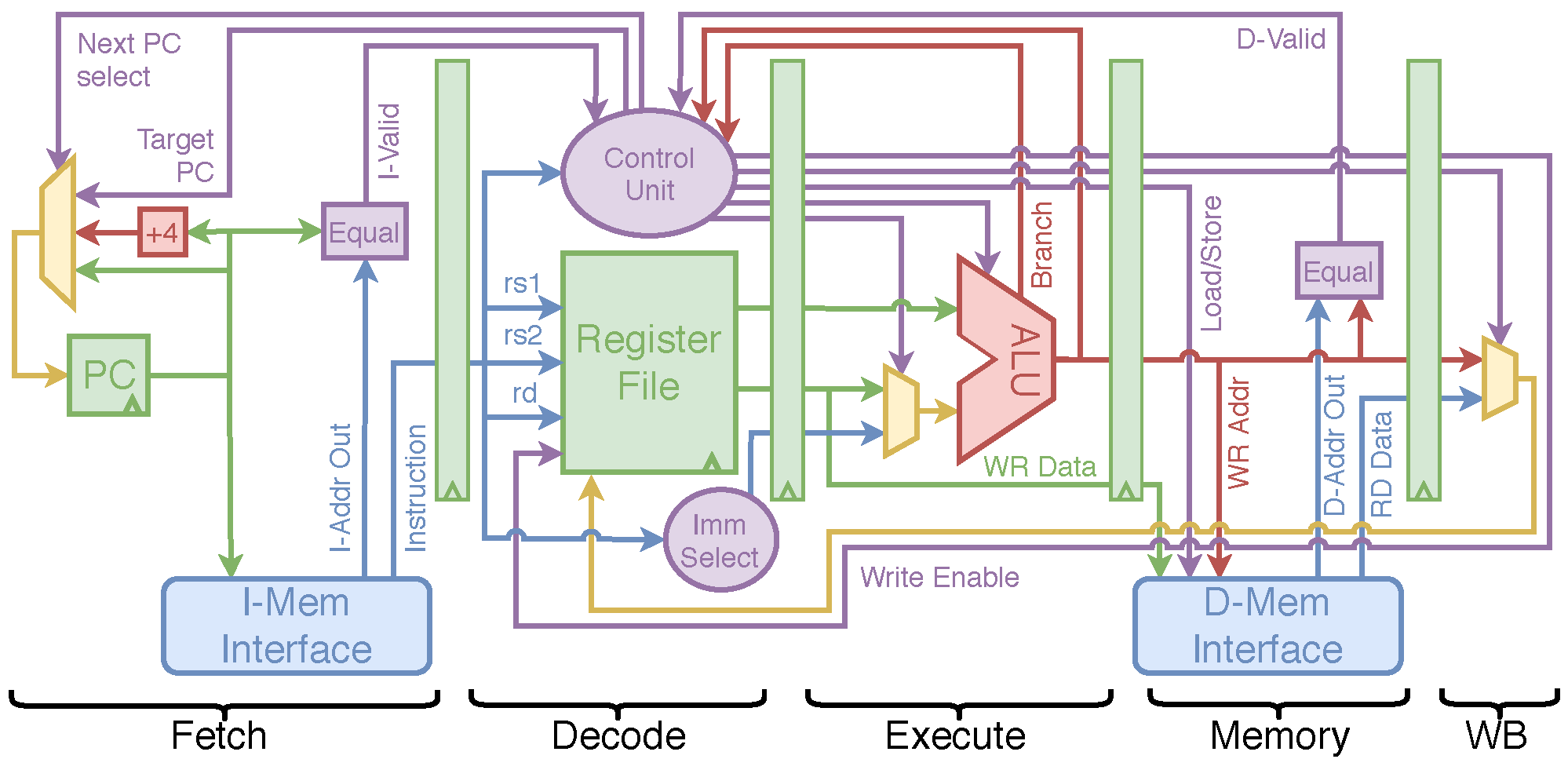

4.2 Five Stage

The Five Stage Core is an RV32I core with Fetch, Decode, Execution, Memory, and Writeback stages. The fetch and memory stages each take a single cycle. This means the instruction and data memory reads must happen combinationally, or the core will stall. If BRAM memories or a cache hierarchy are used, the core stalls every other cycle while the BRAM or cache is read. The seven-stage core avoids these stalls by adding a pipeline stage between the issue and receive stages.
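The cost of these stalls can be estimated with simple arithmetic. The sketch below assumes straight-line code with no data hazards, branches, or extra load/store stalls. It models the BRAM case as one stall cycle per instruction, matching the stall-every-other-cycle behavior described above. A k-stage pipeline then needs about (k - 1) + n(1 + s) cycles for n instructions with s stall cycles each. The function name ideal_cycles is hypothetical, and real cycle counts will differ.

```c
#include <stdint.h>

/* Rough cycle estimate for n instructions on an in-order pipeline with
   `stages` stages (assumed >= 1). Ignores hazards and branches: the first
   instruction needs stages - 1 extra cycles to fill the pipeline, then each
   instruction completes in 1 cycle plus its stall cycles. */
uint64_t ideal_cycles(uint64_t n, unsigned stages, unsigned stalls_per_instr)
{
    if (n == 0)
        return 0;
    return (uint64_t)(stages - 1) + n * (1u + stalls_per_instr);
}
```

Under these assumptions, 1000 instructions take about 2004 cycles on the five-stage core with BRAM (ideal_cycles(1000, 5, 1)). A stall-free seven-stage pipeline needs about 1006 cycles (ideal_cycles(1000, 7, 0)), which is why the seven-stage core suits BRAM and cache memories better.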

The five_stage_top, five_stage_BRAM_top, and five_stage_cache_top modules use the five-stage core with asynchronous SRAM memory, FPGA BRAM memory, and a cache hierarchy, respectively. Several test benches, each running a different test program, are included for each top module.

To run a top-level test bench, execute the run_test script from the five stage directory:

./run_test tb_five_stage_BRAM_top_gcd

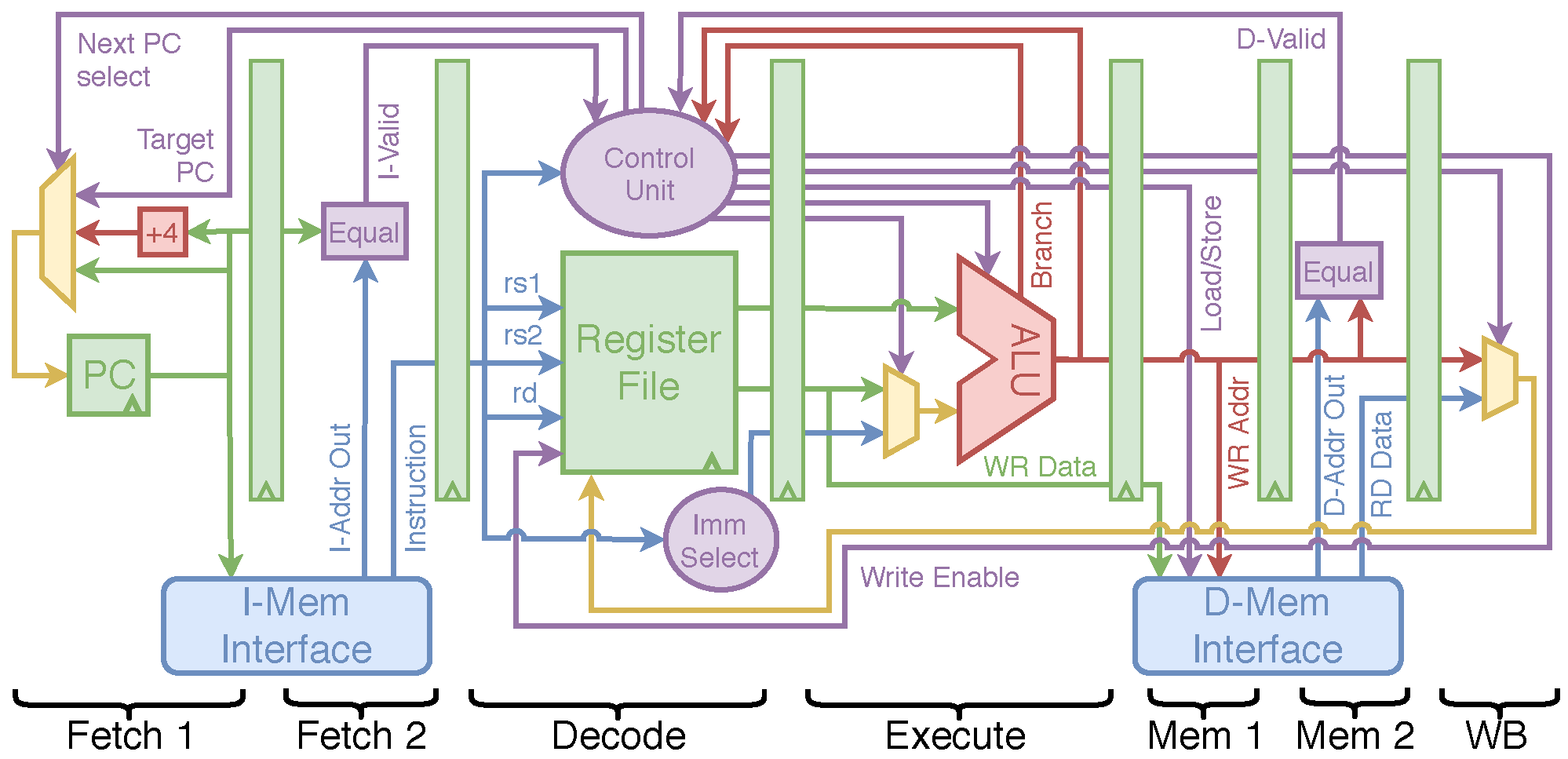

4.3 Seven Stage

The Seven Stage Core supports both RV32I and RV64I configurations, selected with the DATA_WIDTH parameter in the top module or simulation test bench. The seven stages are Fetch-Issue, Fetch-Receive, Decode, Execution, Memory-Issue, Memory-Receive, and Writeback. Splitting fetch and memory access into two stages each allows FPGA BRAMs to be accessed without pipeline stalls.

The seven_stage_BRAM_top and seven_stage_cache_top modules use the seven-stage core with FPGA BRAM memory and a cache hierarchy, respectively. Several test benches, each running a different test program, are included for each top module.

To run a top-level test bench, execute the run_test script from the seven stage directory:

./run_test tb_seven_stage_BRAM_top_gcd

4.4 Seven Stage Privilege

The Seven Stage Privilege Core supports RV64IM and a large subset of the RISC-V privileged specification. Supported privilege modes are M (machine), S (supervisor), and U (user). Traps from the ECALL instruction, as well as timer and software interrupts, are supported. Future versions of this core will continue to add support for privileged specification features, including virtual memory.
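When one of these traps is taken in machine mode, the mcause CSR identifies its cause. Under the RISC-V privileged specification, the most significant bit of mcause (bit 63 on RV64) flags an interrupt, and the remaining bits hold the code:

- 8, 9, and 11 for ECALL from U-, S-, and M-mode
- 1 and 3 for supervisor and machine software interrupts
- 5 and 7 for supervisor and machine timer interrupts

The host-testable C sketch below classifies such a value. On the target, the value would be read with a CSR instruction such as csrr. The enum and function names are hypothetical. This document does not state which of these causes the Seven Stage Privilege core actually reports.

```c
#include <stdint.h>

/* Hypothetical trap categories for the cases this core is described as
   supporting. */
typedef enum {
    TRAP_ECALL_U,
    TRAP_ECALL_S,
    TRAP_ECALL_M,
    TRAP_SOFTWARE_INT,
    TRAP_TIMER_INT,
    TRAP_OTHER
} trap_kind;

/* Classify an RV64 mcause value using the privileged-spec encoding. */
trap_kind classify_mcause(uint64_t mcause)
{
    const uint64_t INTERRUPT_BIT = 1ull << 63;  /* MSB set => interrupt */
    uint64_t code = mcause & ~INTERRUPT_BIT;    /* exception/interrupt code */

    if (mcause & INTERRUPT_BIT) {
        switch (code) {
        case 1: case 3: return TRAP_SOFTWARE_INT;  /* S- or M-level */
        case 5: case 7: return TRAP_TIMER_INT;     /* S- or M-level */
        default:        return TRAP_OTHER;
        }
    }
    switch (code) {
    case 8:  return TRAP_ECALL_U;
    case 9:  return TRAP_ECALL_S;
    case 11: return TRAP_ECALL_M;
    default: return TRAP_OTHER;
    }
}
```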

The seven_stage_priv_BRAM_top module uses FPGA BRAM memory to build a complete processor system. Several test benches, each running a different test program, are included for this module.

To run a top-level test bench, execute the run_test script from the seven stage privilege directory:

./run_test tb_seven_stage_priv_BRAM_top_gcd

4.5 Out-of-Order

The Out-of-Order Core supports the RV64IM ISA. The reorder buffer (ROB) depth is configurable with the ROB_INDEX_WIDTH parameter. The load buffer and store buffer depths are configurable with the LB_INDEX_WIDTH and SB_INDEX_WIDTH parameters. Most RV64I instructions require only a single cycle to compute in the ALU. Division instructions, however, can take up to 64 clock cycles, which makes them an easy way to observe out-of-order behavior in simple programs.
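The function below is a hypothetical sketch of a test that exposes out-of-order execution. It starts a 64-bit division whose result is not needed until the end, followed by a short chain of additions that do not depend on it. On an out-of-order core, those additions can execute while the divider is still busy. The compiler may reorder or constant-fold these statements, so check the --dump disassembly (section 7) to confirm the instruction order actually emitted. The name ooo_demo is illustrative.

```c
#include <stdint.h>

/* Long-latency division followed by work that does not depend on it.
   Assumes b != 0. Returns the quotient; the independent result is written
   to *independent_out. */
int64_t ooo_demo(int64_t a, int64_t b, int64_t *independent_out)
{
    int64_t q = a / b;              /* DIV: may take up to 64 cycles */

    int64_t x = 1, y = 2;
    for (int i = 0; i < 4; i++) {   /* independent of q: can proceed while */
        x += y;                     /* the divider is busy on an OoO core  */
        y += x;
    }
    *independent_out = x + y;
    return q;                       /* first use of the division result */
}
```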

The Delta Processor top module uses a cache hierarchy and FPGA BRAM main memory to build a complete processor system. A test bench with the GCD test program is included for the top module.

To run the top-level test bench, execute the run_test script from the Delta Processor directory:

./run_test tb_delta_v1_gcd

5. Simulating the Trireme Platform with Modelsim

The Trireme Platform includes scripts to simulate the included test benches with Modelsim from the command line. Simulations can also be performed with the Modelsim GUI. The remainder of this section assumes you have Modelsim installed and are comfortable using it.

5.1 Adding Modelsim to your PATH

The included scripts assume that your Modelsim installation is in your $PATH variable. To add Modelsim to your $PATH, execute the following command or add it to your .bashrc file:

export PATH=$PATH:$HOME/intelFPGA_lite/18.1/modelsim_ase/bin

Note that the command above assumes you have the Altera Starter Edition v18.1 of Modelsim installed in the default location (your home directory). If you have a different version of Modelsim or have changed the install location, update the command with the correct path to the installation's bin directory.

5.2 Compiling Modules in Modelsim

A TCL script is used to compile a test bench and the modules it instantiates. The provided load.do script in the modelsim directory will compile every module and test bench in the Trireme Platform with the appropriate arguments.

By default, the TCL variable compile_arg is added to each compile command to provide compiler arguments common to every module. Currently, this variable defines a Verilog macro that points modules to an include file containing additional Verilog parameters. To simulate custom modules with the provided scripts, add the following TCL command to modelsim/load.do. It compiles the modules and adds them to the Modelsim library named "work":

vlog -quiet $compile_arg relative/path/to/your/modules

5.3 Simulating Modules

You can simulate any test bench in the Trireme Platform with the run_test script, which must be executed from the modelsim directory. The script first executes the load.do TCL script to compile all of the modules, then simulates each test bench given as a command-line argument.

5.4 Including .vmh Files

Make sure any necessary memory image (.mem or .vmh) files are in the modelsim/binaries directory before simulating a test bench. Modelsim treats file paths given to the $readmemh system task as relative to the directory Modelsim was launched from. To simulate modules in the Trireme Platform, always launch Modelsim from the modelsim directory, because the default memory image paths in the included test benches are relative to it.
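$readmemh reads whitespace-separated hexadecimal values and accepts @address markers, where the address counts elements of the target memory array. The sketch below writes 32-bit words in that form. The one-word-per-line layout, the 32-bit word width, and the function name format_vmh are assumptions. The .vmh files produced by trireme_gcc --vmh remain the reference for what the included test benches expect.

```c
#include <inttypes.h>
#include <stddef.h>
#include <stdio.h>

/* Write n 32-bit words in a $readmemh-compatible text form: an "@addr" line
   (hex element address) followed by one 8-digit hex word per line.
   Returns the number of characters written, excluding the terminating NUL,
   or -1 if buf (capacity cap) is too small. */
int format_vmh(char *buf, size_t cap, uint32_t word_addr,
               const uint32_t *words, size_t n)
{
    int w = snprintf(buf, cap, "@%" PRIx32 "\n", word_addr);
    if (w < 0 || (size_t)w >= cap)
        return -1;
    size_t used = (size_t)w;

    for (size_t i = 0; i < n; i++) {
        w = snprintf(buf + used, cap - used, "%08" PRIx32 "\n", words[i]);
        if (w < 0 || (size_t)w >= cap - used)
            return -1;              /* output would be truncated */
        used += (size_t)w;
    }
    return (int)used;
}
```

For example, formatting the two words 0x00000013 (a NOP) and 0x0000006f (a jump to itself) at word address 0 produces "@0", followed by 00000013 and 0000006f, each on its own line.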

6. Writing Software for The Trireme Platform

As a complete design space exploration platform, the Trireme Platform provides several example programs and the tools to compile them. After a program is written and compiled, it can be executed on the hardware architecture. Currently, software must be executed "bare-metal", without an operating system. The Trireme Platform supports a limited subset of the C standard library, and complete standard library support is a work in progress. Dynamic memory allocation is supported with the "malloc" and "free" functions. Other standard library features, such as file I/O, have not been implemented yet.

Programs for architectures with multiple HARTs define one "main" function per HART. HART (core) 0 executes the "main" function. Each additional HART executes a "hartN_main" function, where "N" is the HART number (for example, hart1_main). HARTs that fetch instructions from the same memory can be grouped into a single C file with multiple main functions. Example programs are included in the software/applications/src directory.
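The sketch below illustrates the naming convention for a two-HART program. It assumes both HARTs fetch from the same memory and share its globals. hart1_main() is the entry point for HART 1. The main() function for HART 0 is not shown; it would call hart0_total() and use the result. The spin on a volatile flag is only illustrative. Depending on the memory hierarchy, a real program may need a fence or atomic operations to make the store from HART 1 visible to HART 0. All names other than main and hart1_main are hypothetical, and such a file would be compiled with --num-cores set to 2 (section 7).

```c
#include <stdint.h>

#define NUM_HARTS 2   /* assumption: a two-HART system */
#define N 8

/* Shared input and per-HART results, visible to both HARTs when they share
   one memory (assumption). */
static const int32_t data[N] = {3, 1, 4, 1, 5, 9, 2, 6};
volatile int32_t partial[NUM_HARTS];
volatile int32_t done[NUM_HARTS];

/* Each HART sums an interleaved slice: hart, hart + NUM_HARTS, ... */
static int32_t sum_slice(unsigned hart)
{
    int32_t s = 0;
    for (unsigned i = hart; i < N; i += NUM_HARTS)
        s += data[i];
    return s;
}

/* HART 1 entry point, following the hartN_main naming convention. */
int hart1_main(void)
{
    partial[1] = sum_slice(1);
    done[1] = 1;                 /* publish completion to HART 0 */
    return 0;
}

/* Work HART 0's main() would do: its own slice, then wait for HART 1. */
int32_t hart0_total(void)
{
    partial[0] = sum_slice(0);
    while (!done[1])
        ;                        /* spin until HART 1 finishes */
    return partial[0] + partial[1];
}
```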

7. Compiling Software for The Trireme Platform

This section describes how to compile software for the Trireme Platform and assumes you already have the GNU RISC-V toolchain installed.

Software for the Trireme Platform is compiled with the compiler script "trireme_gcc", found in the software directory of the Trireme Platform. trireme_gcc is a wrapper around RISC-V GCC (the GNU Compiler Collection), so it provides the same interface one would expect from GCC.

The Trireme Platform executes software bare-metal, meaning no operating system sets up the execution environment for a program. Instead, the compiled binary must include any environment setup needed to execute the program properly. Programs compiled with trireme_gcc automatically include the setup needed to run on the Trireme Platform.

The compiler script supports all arguments that are supported by the GCC program. These arguments are simply forwarded to GCC for compilation, assembly, and linking. In addition, the script allows the user to specify arguments to control compilation for the Trireme bare-metal environment.

Running ./trireme_gcc or ./trireme_gcc --help lists the arguments specific to the compiler script. For convenience, GCC and compiler script arguments can be intermixed without disturbing the GCC invocation.

The Trireme compiler script arguments fall into three categories: memory layout, libraries, and output files. Most arguments belong to the memory layout category, which controls the structure of the compiled program. Before using the compiler script, find out where the RISC-V toolchain is installed on your machine. By default, the Trireme compiler script assumes it is installed in "/opt/riscv". This location is the prefix given when the toolchain's configuration script was invoked. A different prefix can be specified with the --toolchain-prefix argument.

--start-addr Users can set the start address of the program for a Trireme processor with the --start-addr argument. The start address must be given in decimal and aligned on a word boundary (i.e., a multiple of 4). If this argument is not specified, the default start address of 0x0 is used. When compiling for multiple cores, note that all cores share the same start address.

--stack-addr The program stack address is specified with the --stack-addr argument. It is given in decimal and must be a multiple of 16 bytes. In RISC-V, the stack grows from high addresses to low addresses, so this address should account for the stack size. Trireme does not currently have run-time protection against stack overflow. To minimize possible corruption of program data, choose a stack address that leaves enough room below it for the stack to grow. For both single-core and multi-core configurations, --stack-addr gives core 0's stack start address. The default value of this argument is 2048. In multi-core configurations, each core is assigned its own stack segment.

--stack-size The compiler script uses the --stack-size argument to determine the stack start address of each core after core 0. Starting from core 0's stack address, each successive core's stack start address is offset by stack-size bytes from the previous core's. Values for this argument must be a multiple of 16 and given in decimal. The default value is 2048.

--heap-size The Trireme compiler script places the heap segment at the end of the data section, and this placement cannot be changed by the user. However, the size of the heap segment can be set with the --heap-size argument. Setting it to 0 tells the compiler script that no heap is needed. Values for this argument must be a multiple of four and given in decimal. The default value is 0.

--num-cores To target a multi-core design, use the --num-cores option. If more than one core is specified, the compiler script expects an entry point to be defined for each core. Please refer to section 6 for more details. By default, the number of cores is 1.

--ram-size The main memory size of the target platform is specified as a decimal value with the --ram-size argument. The default value is 2048. This value must be large enough to hold all of the program's text and data.

--link-libgloss Trireme implements a Board Support Package (BSP) that acts as a low-level operating system support layer. The Trireme BSP is a library that can optionally be linked to programs with the --link-libgloss flag. Linking the BSP enables support for the standard C library, including printing to stdout (e.g., puts, printf) and dynamic memory allocation (e.g., malloc, free). The Trireme BSP supports only a subset of the POSIX system calls, so not all programs can be linked successfully for the Trireme platform. The BSP must be built before this flag is used.

--{vmh, dump, raw-binary} By default, the compiler script generates only a ".elf" file. Three other output files are supported: ".vmh", ".bin", and ".dump".

- A ".vmh" file can be used to initialize memory in simulation or FPGA BRAMs in synthesis. Specifying --vmh <file path> generates a vmh file at the given path.
- A ".dump" file contains objdump disassembly, which can be useful for debugging. Specify --dump <file path> to generate one at the given path.
- Specify --raw-binary to create a raw binary. The ELF headers and metadata are removed from this binary so that it can be executed directly when uploaded to an FPGA implementation with a bootloader program.

All three arguments can be specified together.

--trireme-lib-path The Trireme compiler script can be invoked from directories other than "software". If it is run from another directory, the Trireme library path must be specified with --trireme-lib-path. By default, this points to the "software/library" directory, where the BSP is installed after it is built. This is only necessary if --link-libgloss is used.
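The numeric constraints on the memory-layout arguments can be collected into a quick pre-flight check. The sketch below encodes only the documented rules and defaults:

- a word-aligned start address
- a 16-byte-aligned stack address and stack size
- a heap size that is a multiple of four

The struct and function names are hypothetical, and the argument checking inside trireme_gcc may differ.

```c
#include <stdbool.h>
#include <stdint.h>

/* Memory-layout arguments of trireme_gcc, in bytes (given in decimal on the
   command line). Hypothetical helper type. */
typedef struct {
    uint32_t start_addr;  /* --start-addr */
    uint32_t stack_addr;  /* --stack-addr (core 0) */
    uint32_t stack_size;  /* --stack-size */
    uint32_t heap_size;   /* --heap-size  */
    uint32_t num_cores;   /* --num-cores  */
    uint32_t ram_size;    /* --ram-size   */
} layout_opts;

/* Documented default values. */
layout_opts layout_defaults(void)
{
    layout_opts o = {0, 2048, 2048, 0, 1, 2048};
    return o;
}

/* Check the alignment rules stated in the documentation. */
bool layout_opts_valid(const layout_opts *o)
{
    return o->start_addr % 4 == 0    /* word aligned */
        && o->stack_addr % 16 == 0   /* 16-byte aligned stack */
        && o->stack_size % 16 == 0
        && o->heap_size % 4 == 0
        && o->num_cores >= 1;        /* at least one core */
}
```

A layout that passes these checks might then be requested with a command such as ./trireme_gcc --stack-addr 4096 --ram-size 8192 --vmh prog.vmh prog.c, where prog.c and prog.vmh are placeholder file names.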

More system and compiling directions can be found [here].

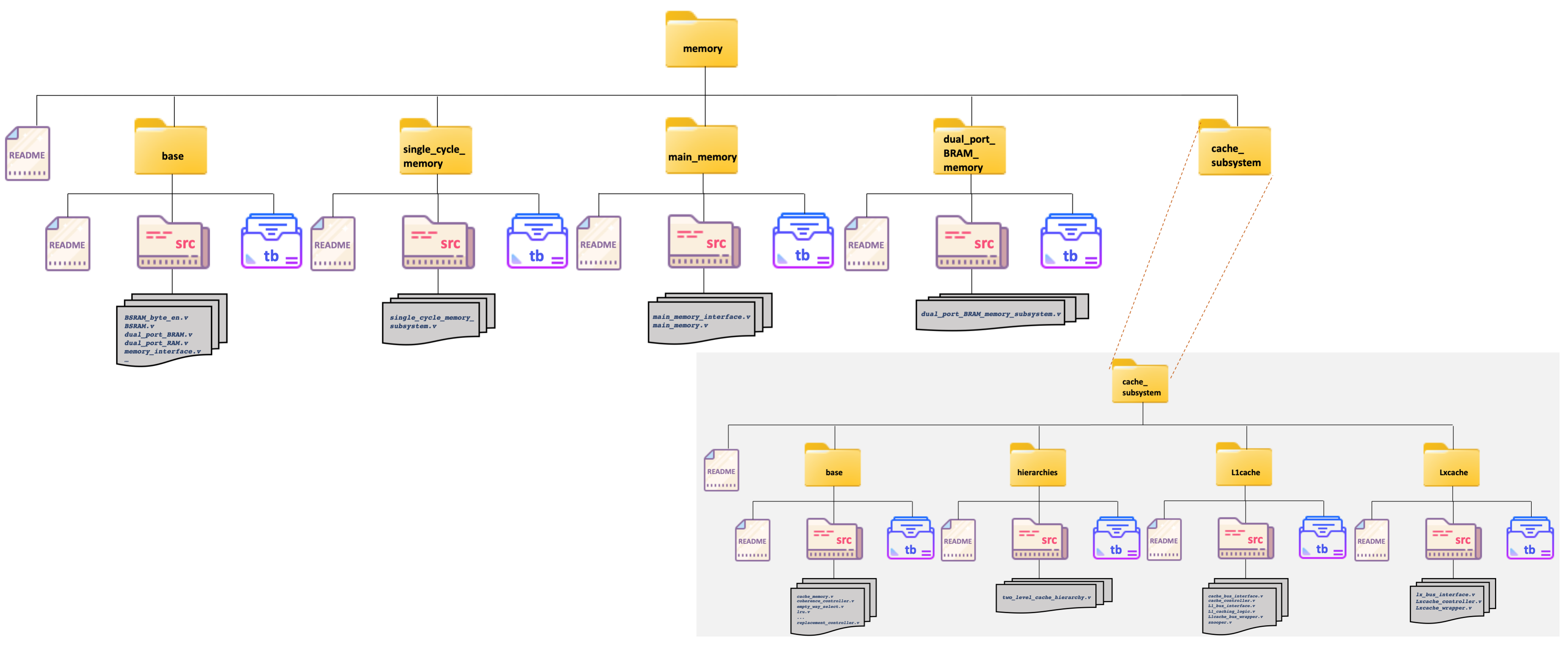

8. More Fine-Grained Folder Structure